Hugging Face Transformers - Product Review

Hugging Face Transformers Product Overview

Hugging Face Transformers is a leading platform in the field of artificial intelligence, particularly known for its contributions to natural language processing (NLP). Having started as a chatbot app, Hugging Face has evolved into a comprehensive open-source platform that provides developers with access to a vast array of pre-trained machine learning models.

These models are designed to perform a variety of tasks, such as text classification, language translation, and sentiment analysis, making them highly valuable for both researchers and developers working in NLP and related fields.

The primary audience for Hugging Face Transformers includes developers, data scientists, and researchers who are interested in building and deploying machine learning models efficiently. The platform is often referred to as the “GitHub of machine learning” due to its community-driven approach, allowing users to share and collaborate on models and datasets.

Key features of Hugging Face Transformers include its extensive library of pre-trained models, which simplifies the process of model training and deployment. The platform supports multiple machine learning frameworks such as PyTorch and TensorFlow, providing flexibility in how models are implemented.

Hugging Face Transformers User Interface and Experience

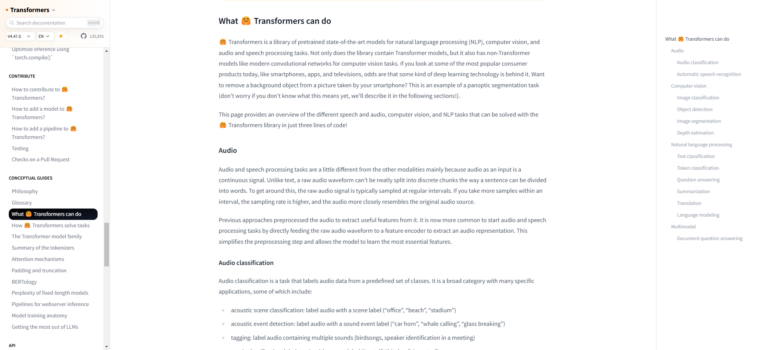

Hugging Face Transformers offers a user-friendly interface that simplifies the process of working with complex machine learning models. The platform’s design focuses on accessibility, making it approachable for both beginners and experienced developers.

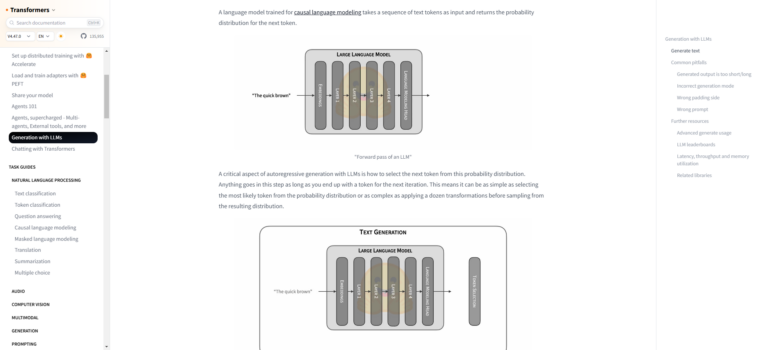

The main interface revolves around the Hugging Face Hub, which serves as a central repository for models, datasets, and demos. Users can easily browse through thousands of pre-trained models and datasets, filtering by task, language, or framework. This streamlined organization helps developers quickly find the resources they need for their projects.

One of the standout features of Hugging Face Transformers is its intuitive API. The library provides a consistent interface across different models and tasks, allowing users to switch between models with minimal code changes. This uniformity significantly reduces the learning curve and makes it easier to experiment with various approaches.

The platform’s ease of use is evident in its pipeline functionality. With just a few lines of code, users can implement complex NLP tasks like text classification, named entity recognition, or question answering. This abstraction of underlying complexities allows developers to focus on their specific use cases rather than getting bogged down in implementation details.

Hugging Face also offers interactive tools like Spaces, which allows users to create and share demo applications for their models without requiring extensive technical knowledge. This feature enhances the overall user experience by providing a visual and interactive way to showcase and test models.

However, it’s worth noting that some users have reported challenges with the platform. Issues such as bugs, complex configurations, and occasional documentation gaps have been mentioned. Some developers have found that while the surface-level interface is user-friendly, diving deeper into customization or troubleshooting can be more challenging.

Despite these concerns, the overall user experience of Hugging Face Transformers is generally positive. The platform’s commitment to open-source principles and its active community contribute to a collaborative and supportive environment. Users can easily access help through forums, documentation, and community contributions, which enhances the overall experience.

In summary, Hugging Face Transformers provides a user interface that balances simplicity with powerful functionality. While there may be some complexities when delving into advanced usage, the platform’s design philosophy of making AI accessible shines through in its user-friendly approach to model implementation and deployment.

Hugging Face Transformers Key Features and Functionality

Transformers Library

The Transformers library is at the heart of Hugging Face. It provides access to thousands of pre-trained models that can handle various NLP tasks, such as:

- Text Classification: Categorizing text into predefined labels.

- Named Entity Recognition (NER): Identifying entities like names and organizations in text.

- Question Answering: Finding answers to questions based on given context.

- Machine Translation: Translating text from one language to another.

This library allows users to quickly load and fine-tune models, saving time and computational resources compared to training from scratch.

Model Hub

The Model Hub is a centralized repository where users can find, share, and upload models. With over 350,000 models available, it serves as a valuable resource for developers. Users can easily search for models based on specific tasks or requirements, making it simple to find the right tool for their projects.

Tokenizers

Tokenization is a crucial step in preparing text for model training. Hugging Face provides user-friendly tokenizers that convert text into tokens—smaller units like words or subwords. These tokenizers also handle padding and truncation, ensuring that all input sequences are uniform in length. This feature simplifies preprocessing and enhances model performance.
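To make padding and truncation concrete, here is a minimal plain-Python sketch of the idea. It is not the Hugging Face tokenizer implementation: the function name `pad_and_truncate` and the padding id `PAD_ID = 0` are assumptions chosen for illustration, and the token ids are made up.

```python
from typing import Dict, List

PAD_ID = 0  # hypothetical padding token id, chosen for this sketch


def pad_and_truncate(batch: List[List[int]], max_length: int) -> Dict[str, List[List[int]]]:
    """Cut each sequence to max_length, then right-pad shorter ones with PAD_ID."""
    input_ids, attention_mask = [], []
    for ids in batch:
        kept = ids[:max_length]          # truncation: drop tokens beyond max_length
        n_pad = max_length - len(kept)   # number of padding positions needed
        input_ids.append(kept + [PAD_ID] * n_pad)
        # 1 marks a real token, 0 marks padding the model should ignore
        attention_mask.append([1] * len(kept) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


encoded = pad_and_truncate([[5, 6, 7], [8, 9, 10, 11, 12, 13]], max_length=4)
print(encoded["input_ids"])       # [[5, 6, 7, 0], [8, 9, 10, 11]]
print(encoded["attention_mask"])  # [[1, 1, 1, 0], [1, 1, 1, 1]]
```

Both sequences come out with length 4, which is what lets a batch be stacked into a single rectangular tensor.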

Datasets Library

Hugging Face offers a Datasets library that includes a wide variety of datasets for training and testing models. This library allows users to easily load datasets, perform preprocessing, and split data into training and validation sets. It streamlines the data handling process, making it easier to focus on model development.

Fine-Tuning Capabilities

One of the standout features of Hugging Face is its ability to fine-tune pre-trained models on custom datasets. This means users can adapt existing models to specific tasks or domains without starting from scratch. Fine-tuning often leads to improved accuracy and performance tailored to unique applications.
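The sketch below shows the shape of a fine-tuning loop under simplifying assumptions. To stay runnable without downloading weights it uses a tiny, randomly initialised BERT classifier as a stand-in; real fine-tuning would load a pre-trained checkpoint with `from_pretrained` and use tokenized text rather than random token ids. The configuration sizes, learning rate, and batch are all arbitrary illustrative values.

```python
import torch
from transformers import BertConfig, BertForSequenceClassification

torch.manual_seed(0)

# Tiny, randomly initialised stand-in so the sketch runs offline.
# In practice you would load pre-trained weights with from_pretrained(...).
config = BertConfig(
    vocab_size=100, hidden_size=32, num_hidden_layers=2,
    num_attention_heads=2, intermediate_size=64, num_labels=2,
)
model = BertForSequenceClassification(config)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

# Made-up batch: 4 sequences of 8 token ids, with binary labels.
input_ids = torch.randint(0, config.vocab_size, (4, 8))
labels = torch.tensor([0, 1, 0, 1])

model.train()
for step in range(3):
    # Passing labels makes the model compute the classification loss itself.
    outputs = model(input_ids=input_ids, labels=labels)
    outputs.loss.backward()
    optimizer.step()
    optimizer.zero_grad()
    print(f"step {step}: loss={outputs.loss.item():.4f}")
```

The library also ships a higher-level `Trainer` class that wraps this loop; the manual version is shown here only because it makes each step visible.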

Integration with Popular Frameworks

Hugging Face Transformers supports multiple machine learning frameworks, including TensorFlow, PyTorch, and JAX. This flexibility allows developers to choose their preferred tools while leveraging the advanced capabilities of Hugging Face models.

Inference API

The Inference API enables users to deploy models easily for real-time predictions. This feature allows developers to integrate NLP capabilities into applications without extensive setup or infrastructure management.

Community Engagement

Hugging Face fosters a strong community where users can share models, datasets, and insights. This collaborative environment encourages knowledge sharing and continuous improvement of tools and resources.

Multimodal Capabilities

Beyond text processing, Hugging Face also supports multimodal tasks involving images and audio. This includes functionalities like image classification and automatic speech recognition, broadening the scope of applications for developers.

Documentation and Tutorials

Hugging Face provides comprehensive documentation and tutorials that guide users through various functionalities of the platform. This support helps both beginners and experienced developers navigate the tools effectively.

Hugging Face Transformers Performance and Accuracy

Speed and Efficiency

Hugging Face Transformers generally offers good performance, especially for inference tasks. However, some users have reported challenges with evaluation speed. For instance, one developer noted that evaluating just 20 short text inputs took about 160 seconds per step, which is very slow for such a small workload. This suggests that there is room for optimization in certain scenarios, particularly when dealing with large datasets or complex models.

Model Accuracy

The accuracy of Hugging Face models is generally high, thanks to the use of state-of-the-art architectures and pre-training on large datasets. Many models available on the platform achieve top results on various NLP benchmarks. However, the actual accuracy can vary depending on the specific task, model, and dataset used.

Benchmarking

The Transformers library has shipped utilities for benchmarking model performance, allowing users to measure inference speed and memory usage. Such measurements are valuable for comparing different models and optimizing for specific hardware constraints. However, Hugging Face’s own benchmarking tools are now deprecated, and users are advised to use external benchmarking libraries for more comprehensive performance analysis.
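For a quick measurement without any dedicated tool, a small timing harness built on the Python standard library is often enough. The sketch below is one possible approach, not a Hugging Face API: `benchmark` and `fake_inference` are hypothetical names, and `fake_inference` merely stands in for a real model call. Note that `tracemalloc` only sees Python-level allocations, so it will not reflect GPU memory or memory held by native tensor libraries.

```python
import time
import tracemalloc
from statistics import median
from typing import Callable, Dict, List


def benchmark(fn: Callable[[], object], warmup: int = 2, runs: int = 5) -> Dict[str, float]:
    """Return median wall-clock latency (seconds) and peak Python heap use (bytes) for fn."""
    for _ in range(warmup):  # warm-up calls are not timed
        fn()

    latencies: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)

    # Memory is measured in a separate call so tracing does not slow the timed runs.
    tracemalloc.start()
    fn()
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    return {"median_latency_s": median(latencies), "peak_python_bytes": float(peak)}


def fake_inference() -> int:
    """Hypothetical stand-in for a model call, e.g. a pipeline run on a fixed batch."""
    return sum(i * i for i in range(50_000))


stats = benchmark(fake_inference)
print(stats)
```

To compare two models, wrap each model call in its own zero-argument function and pass both through `benchmark` with the same inputs.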

Fine-Tuning Capabilities

One of the strengths of Hugging Face Transformers is its fine-tuning capabilities. Users can take pre-trained models and adapt them to specific tasks or domains, often achieving high accuracy with relatively little training data. This flexibility contributes to the overall performance and accuracy of models in real-world applications.

Limitations and Areas for Improvement

- Resource Intensity: Some of the larger models, particularly those based on architectures like GPT, can be extremely resource-intensive. This can limit their usability on devices with constrained hardware or in scenarios requiring real-time processing.

- Bias in Pre-trained Models: As with many pre-trained models, there’s a risk of inherent biases from the training data. Users need to be aware of this and potentially implement debiasing techniques for sensitive applications.

- Complexity for Beginners: While Hugging Face aims to be user-friendly, some users find that there’s still a significant learning curve, especially for more advanced features or when troubleshooting issues.

- Documentation and Bug Fixes: Some users have reported issues with outdated documentation and the presence of bugs in the library. Improving documentation and increasing the focus on bug fixes and stability could enhance the overall user experience and potentially improve performance.

- Consistency Across Environments: There have been reports of inconsistent behavior across different environments, such as dimension switching without warning. Addressing these inconsistencies could improve reliability and ease of use.

- Optimization for Specific Tasks: While the general-purpose nature of many Hugging Face models is a strength, there’s potential for improvement in optimizing models for specific tasks or domains.

Hugging Face Transformers Pricing and Plans

Free Tier

Hugging Face provides a generous free tier that includes:

- Access to the open-source Transformers library

- Use of the Hugging Face Hub for model and dataset sharing

- Basic CPU resources for running models (2 vCPU, 16 GB memory)

- Limited access to the Inference API

This free tier allows developers to explore and use many of Hugging Face’s core features without any cost.

Pro Plan

For individual developers or small teams, Hugging Face offers a Pro plan priced at $9 per month. This plan includes:

- 1 million input characters per month for the Inference API

- 2 hours of audio processing

- One free AutoTrain project

Lab Plan

The Lab plan is designed for more intensive usage, operating on a pay-as-you-go model. This plan offers:

- Unlimited access to the Inference API (billed based on usage)

- No restrictions on audio processing

- Pay-as-you-go pricing for AutoTrain projects

Enterprise Plan

For large organizations, Hugging Face provides custom Enterprise plans. These include:

- Tailored pricing based on specific needs

- Enhanced support and security features

- Custom quotas for API usage and compute resources

Compute Resources Pricing

Hugging Face offers various compute options for tasks like model training and inference:

- CPU options range from free (2 vCPU, 16 GB memory) to $0.03/hour for upgraded resources (8 vCPU, 32 GB memory)

- GPU options include:

  - Nvidia T4 (small): $0.40/hour

  - Nvidia A100 (large): $4.00/hour

- TPU v5e options ranging from $1.38/hour to $11.00/hour
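Hourly rates are easier to compare once converted into a monthly figure. The short calculation below uses only the prices quoted in this section; the hardware labels such as `t4-small` are informal names made up for the sketch, and current rates should be checked on the official pricing page before budgeting.

```python
# Hourly rates copied from this review; they may be out of date.
HOURLY_RATE_USD = {
    "cpu-basic": 0.00,    # 2 vCPU, 16 GB memory (free)
    "cpu-upgrade": 0.03,  # 8 vCPU, 32 GB memory
    "t4-small": 0.40,     # Nvidia T4 (small)
    "a100-large": 4.00,   # Nvidia A100 (large)
}


def monthly_cost(hardware: str, hours_per_day: float, days: int = 30) -> float:
    """Estimated cost in USD for running the given hardware hours_per_day for `days` days."""
    return round(HOURLY_RATE_USD[hardware] * hours_per_day * days, 2)


for name in HOURLY_RATE_USD:
    cost = monthly_cost(name, hours_per_day=24)
    print(f"{name:12s} ${cost:8.2f} per 30 days at 24 h/day")
```

Running around the clock, the T4 comes to $288 and the A100 to $2,880 per 30 days, which shows how quickly always-on GPU hardware dominates a budget compared with the $21.60 CPU upgrade.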

Cloud Marketplace Pricing

For deployments on major cloud platforms, Hugging Face Generative AI Services (HUGS) is available at $1 per hour per container on AWS and Google Cloud Platform marketplaces.

Inference Endpoints

Inference Endpoints is a newer service that replaces the paid tier of the Inference API. Its pricing varies based on the chosen hardware and usage.

AutoTrain

AutoTrain, a service for automated model training, is available on a pay-per-use basis, with the first project free for Pro plan subscribers.

Spaces Hardware Upgrades

Users can now choose upgraded hardware for running ML demos in Spaces, with pricing based on the selected resources.

Hugging Face Transformers Integration and Compatibility

Framework Support

Hugging Face Transformers offers excellent integration with major deep learning frameworks:

- PyTorch and TensorFlow: The library provides seamless support for both PyTorch and TensorFlow. Users can easily switch between these frameworks, allowing for flexibility in model development and deployment.

- JAX: There’s also growing support for JAX, expanding the options for researchers and developers who prefer this framework.

Cloud Platform Integration

Hugging Face Transformers integrates well with major cloud platforms:

- Amazon SageMaker: AWS offers deep integration with Hugging Face, allowing users to train and deploy models using SageMaker. This includes pre-configured Deep Learning Containers that come with Hugging Face libraries pre-installed.

- Google Cloud Platform and Microsoft Azure: While not as tightly integrated as with AWS, these platforms also support the use of Hugging Face models and tools.

Mobile Development

For mobile applications, Hugging Face Transformers can be integrated through various methods:

- Model Conversion: Models can be exported to formats like ONNX or TorchScript, which are more suitable for mobile deployment.

- Lightweight Models: Hugging Face offers smaller, optimized models that are more suitable for mobile devices with limited resources.

Web Deployment

Hugging Face models can be deployed for web applications:

- Inference API: This allows developers to use Hugging Face models via API calls, making it easy to integrate NLP capabilities into web applications.

- Spaces: A platform for hosting machine learning demos, allowing easy sharing and deployment of models.

Interoperability

One of the strengths of Hugging Face Transformers is its interoperability:

- Cross-Framework Usage: Models trained in one framework (e.g., PyTorch) can often be used in another (e.g., TensorFlow) with minimal code changes.

- Model Hub: The extensive Model Hub allows for easy sharing and use of models across different projects and platforms.

Development Tools Integration

Hugging Face Transformers works well with various development tools:

- Jupyter Notebooks: Seamless integration with Jupyter environments for interactive development and experimentation.

- Version Control: Compatible with Git-based workflows, allowing for easy versioning of models and code.

Compatibility Across Devices

The library is designed to work across different computing environments:

- GPU Acceleration: Full support for GPU acceleration on NVIDIA hardware.

- CPU Compatibility: Models can run on CPU-only machines, albeit with lower performance.

- TPU Support: Growing support for Google’s Tensor Processing Units (TPUs) for high-performance computing.

Challenges and Considerations

While Hugging Face Transformers offers broad compatibility, there are some considerations:

- Resource Requirements: Large models may be challenging to run on devices with limited resources.

- Optimization Needs: For optimal performance on mobile or edge devices, models often require additional optimization or compression.

- Version Compatibility: Ensuring compatibility across different versions of Hugging Face and other integrated libraries can sometimes be challenging.
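One low-effort way to reduce version-compatibility surprises is to record exactly which package versions are installed in each environment. The sketch below does this with the standard library; the helper name `installed_versions` and the list of packages are illustrative assumptions, and the last package name is deliberately fictitious to show the "not installed" case.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is not installed."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


# Packages to check; the last name is fictitious and should report as missing.
report = installed_versions(["transformers", "torch", "tokenizers", "no-such-package-xyz"])
for name, ver in report.items():
    print(f"{name}: {ver or 'not installed'}")
```

Comparing this report between a working and a failing environment is usually the fastest first step when behavior differs across machines.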

Hugging Face Transformers Customer Support and Resources

Support Channels

- GitHub Issues: For technical support or bug reports, users can create issues directly in the Hugging Face Transformers GitHub repository. This is ideal for tracking bugs, requesting features, or getting help with troubleshooting problems.

- Hugging Face Forums: The community forums are a great place to ask questions, share experiences, and discuss Transformers with other users and the Hugging Face team. They are particularly useful for “Please explain” questions or user-specific feature requests.

- Email Support: For enterprise users or those with billing-related inquiries, Hugging Face provides direct email support. This ensures that sensitive or account-specific issues are handled appropriately and confidentially.

Additional Resources

- Documentation: Hugging Face maintains comprehensive documentation, including a quick tour and detailed guides for various tasks and models.

- Tutorials and Examples: The official repository contains maintained examples demonstrating how to approach common tasks using Transformers.

- Community-Created Content: There are numerous community-created tutorials, books, and resources available.

- Model Hub: The extensive Model Hub allows users to easily find, share, and use pre-trained models across different projects and platforms.

- Spaces: A platform for hosting machine learning demos, enabling easy sharing and deployment of models.

- AutoTrain: For users of AutoTrain Advanced, Hugging Face provides specific support channels, including GitHub issues and forum discussions.

Hugging Face Transformers Pros and Cons

Pros:

- Access to State-of-the-Art Models: Hugging Face provides easy access to a wide range of pre-trained models, including BERT, GPT, and RoBERTa, which can be quickly deployed or fine-tuned for specific tasks.

- Comprehensive Libraries: The platform offers open-source libraries that simplify tasks like model training, data processing, and tokenization.

- Community and Collaboration: Hugging Face fosters a collaborative environment where developers can share models, datasets, and applications.

- User-Friendly Tools: Features like the Hugging Face Hub (which includes the Model Hub) and the Inference API allow for seamless model deployment and integration.

- Fine-Tuning Capabilities: The platform enables users to adapt pre-trained models to specific domains or tasks, reducing the time and resources required for model development.

- Framework Flexibility: Hugging Face supports multiple frameworks including PyTorch, TensorFlow, and JAX, giving developers flexibility in implementation.

- Democratization of AI: By making advanced NLP models accessible, Hugging Face is helping to democratize AI technology.

Cons:

- Bugs and Inconsistencies: Users have reported numerous bugs, inconsistencies across environments, and subtle breaking changes that can cause unexpected behavior.

- Complex Configuration: The library’s configuration options can be extensive and sometimes confusing, especially for beginners.

- Documentation Issues: Some users find the documentation to be inadequate or outdated, making it challenging to troubleshoot problems.

- Performance Concerns: There have been reports of slow performance, particularly when loading the library and working with certain models.

- Steep Learning Curve: Despite efforts to be user-friendly, some users find there’s still a significant learning curve, especially for more advanced features or troubleshooting.

- Resource Intensity: Some of the larger models can be extremely resource-intensive, limiting their usability on devices with constrained hardware.

- Potential for Bias: As with many pre-trained models, there’s a risk of inherent biases from the training data, which users need to be aware of and potentially address.

- Rapid Evolution: The fast-paced development of the platform can lead to compatibility issues and the need for frequent updates to keep up with changes.

Hugging Face Transformers Comparison with Competitors

Unique Features of Hugging Face

- Extensive Model Library: Hugging Face offers a vast collection of pre-trained models, including cutting-edge models like BERT, GPT, and RoBERTa, which are accessible through its Transformers library.

- Community-Driven Platform: Hugging Face has a strong community focus, encouraging collaboration and sharing of models and datasets.

- Open-Source Ecosystem: The platform is committed to open-source principles, providing tools like the Transformers library, Datasets, and Tokenizers that are widely used by the AI community.

- Integration and Flexibility: Hugging Face supports multiple frameworks such as PyTorch, TensorFlow, and JAX, offering flexibility in model deployment and development.

- Spaces for Model Hosting: The Spaces feature allows developers to create interactive demos and share their models with the community, enhancing collaboration and visibility.

Competitors

- Amazon SageMaker

  - Strengths: SageMaker excels in enterprise environments with its robust infrastructure for deploying scalable machine learning solutions. It integrates seamlessly with other AWS services, making it ideal for large-scale applications.

  - Comparison: While Hugging Face focuses on ease of access to pre-trained models and community engagement, SageMaker offers more comprehensive enterprise solutions with advanced analytics capabilities.

- H2O.ai

  - Strengths: Known for its AutoML platform, H2O.ai targets enterprise users across various sectors like finance and healthcare. It provides automated machine learning tools that simplify model development.

  - Comparison: H2O.ai’s business model is more enterprise-focused compared to Hugging Face’s open-source community approach. Hugging Face benefits from strong network effects due to its active community.

- NLP Cloud

  - Strengths: Offers an API for NLP tasks with a focus on performance and cost-effectiveness. It provides pre-loaded models that are always available, reducing latency issues.

  - Comparison: NLP Cloud’s pricing model is seen as more favorable for production use compared to Hugging Face’s pay-as-you-go structure.

- spaCy

  - Strengths: spaCy is a popular NLP library known for its efficiency and ease of use in production environments. It provides pre-trained pipelines for various languages.

  - Comparison: spaCy is often preferred for applications requiring fast processing speeds and lower resource consumption compared to the larger models offered by Hugging Face.

- OpenAI

  - Strengths: Known for developing large language models like GPT-3, OpenAI focuses on cutting-edge research in AI.

  - Comparison: OpenAI’s largest models, such as GPT-3, are offered as hosted API services rather than as downloadable weights, whereas Hugging Face distributes openly licensed models (including OpenAI’s earlier GPT-2) that can be downloaded and run locally.

Hugging Face Transformers Frequently Asked Questions

What is Hugging Face Transformers?

Hugging Face Transformers is an open-source library that provides access to state-of-the-art pre-trained models for Natural Language Processing (NLP) tasks. It offers a wide range of models, including BERT, GPT, and RoBERTa, which can be easily used for tasks like text classification, named entity recognition, and question answering. The library is designed to make it simple for developers and researchers to use and fine-tune these models for specific applications.

Which frameworks does Hugging Face Transformers support?

Hugging Face Transformers supports multiple deep learning frameworks, primarily PyTorch and TensorFlow. This flexibility allows users to work with their preferred framework. Additionally, there's growing support for JAX, expanding the options for researchers and developers. The library provides a consistent API across these frameworks, making it easy to switch between them with minimal code changes.

How do I use a pre-trained model?

Using a pre-trained model from Hugging Face Transformers is straightforward. Here's a basic example:

```python
from transformers import pipeline

# Load a pre-trained model for sentiment analysis
classifier = pipeline('sentiment-analysis')

# Use the model
result = classifier('I love using Hugging Face Transformers!')
print(result)
```

This code loads a pre-trained model for sentiment analysis and uses it to analyze a given text. The library handles the complexities of loading the model and tokenizing the input.

Can I fine-tune models on my own data?

Yes, Hugging Face Transformers provides tools for fine-tuning pre-trained models on custom datasets. This process allows you to adapt the models to specific domains or tasks. The library offers functions and classes to simplify the fine-tuning process, including data preprocessing, training loops, and evaluation metrics. You can fine-tune models using your own labeled data to improve performance on your specific use case.

What is the Model Hub and how do I use it?

The Model Hub is a central repository where users can find, share, and upload models. It contains over 350,000 models for various NLP tasks. To use the Model Hub:

- Visit huggingface.co/models

- Search for a model that fits your task

- Use the model's name in your code to load it

For example:

```python
from transformers import AutoModel, AutoTokenizer

model_name = "bert-base-uncased"

model = AutoModel.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)
```

This code loads a pre-trained BERT model and its associated tokenizer from the Model Hub.

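Does Hugging Face Transformers support multiple languages?

Hugging Face Transformers supports multilingual models that can process text in numerous languages. For instance, mBERT (multilingual BERT) and XLM-RoBERTa are pre-trained on text covering roughly 100 languages. Once fine-tuned, a single such model can handle tasks like classification and named entity recognition across many languages without requiring a separate model for each language. Translation is handled by sequence-to-sequence models, such as the MarianMT and mBART families, rather than by these encoder-only models.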

What are the hardware requirements?

The hardware requirements depend on the size of the model and the task you're performing. Many models can run on standard CPUs, but for optimal performance, especially with larger models, a GPU is recommended. Hugging Face Transformers supports various hardware configurations:

- CPU: Suitable for smaller models and inference tasks

- GPU: Recommended for training and working with larger models

- TPU: Supported for high-performance computing needs

The library also provides tools for optimizing model performance on different hardware setups.

Can Hugging Face Transformers be used in production?

Yes, Hugging Face Transformers can be used in production environments. The library offers features like model quantization and pruning to optimize models for deployment. It also provides integration with serving frameworks like TensorFlow Serving and TorchServe. However, users should consider factors like model size, inference speed, and resource requirements when deploying models in production. For enterprise-grade support and additional features, Hugging Face offers paid plans and services.

Hugging Face Transformers Conclusion and Recommendation

Hugging Face Transformers has established itself as a cornerstone in the field of Natural Language Processing (NLP), offering a powerful and versatile toolkit for developers, researchers, and organizations.

Here’s a final assessment of the platform:

Key Strengths:

- Extensive Model Library: Access to a vast array of state-of-the-art pre-trained models.

- Ease of Use: Simplified APIs for quick implementation of complex NLP tasks.

- Community-Driven: Active community fostering collaboration and continuous improvement.

- Flexibility: Support for multiple frameworks and easy integration with various platforms.

- Regular Updates: Frequent updates keeping pace with the latest advancements in NLP.

Considerations:

- Learning Curve: While user-friendly, mastering advanced features can be challenging for beginners.

- Resource Intensity: Some larger models require significant computational resources.

- Rapid Evolution: Fast-paced development can lead to occasional compatibility issues.

Who Would Benefit Most:

- Researchers: Ideal for those exploring cutting-edge NLP techniques and model architectures.

- Developers: Great for quickly implementing NLP features in applications.

- Startups: Enables rapid prototyping and deployment of AI-powered solutions.

- Educational Institutions: Valuable for teaching and learning about modern NLP techniques.

- Large Enterprises: Useful for organizations looking to integrate advanced NLP capabilities into their products or services.

Overall Recommendation:

Hugging Face Transformers is highly recommended for anyone working in or exploring the field of NLP. Its blend of accessibility, performance, and community support makes it an excellent choice for a wide range of users and applications. For beginners, it offers a gentle entry point into the world of advanced NLP. For experienced practitioners, it provides tools to push the boundaries of what’s possible in language processing and generation.

However, users should be prepared to invest time in learning the platform and staying updated with its rapid developments. For those with specific enterprise needs or requiring extensive customization, it may be worth exploring additional tools or services alongside Hugging Face Transformers.

In conclusion, Hugging Face Transformers is a powerful, flexible, and community-driven platform that has rightfully earned its place as a leading tool in the NLP landscape. Whether you’re building a chatbot, analyzing sentiment, or conducting cutting-edge research, Hugging Face Transformers provides the tools and resources to bring your NLP projects to life.